"Scientists created a minimally invasive brain–machine interface using functional ultrasound (fUS) to accurately map brain activity related to movement planning with a resolution of 100 micrometers. Credit: Caltech" (ScitechDaily, Reading Minds With Ultrasound: Caltech’s New Brain–Machine Interface)

Caltech researchers have worked on a brain-machine interface (BMI) that uses ultrasound to read brain activity. Systems of this kind could make it possible to turn thoughts into text.

Researchers at Caltech created a new AI-powered brain-machine interface (BMI) that can transform thoughts, or EEG signals, into written text. The term BMI is a synonym for brain-computer interface (BCI), so the two terms mean the same thing. Caltech's advanced system makes new kinds of language-model applications possible.

Researchers can connect brain implants to language models, so that a person can control the language model through EEG. The ability to transform thoughts into text gives us a new text input method. The system can feed this text into a language model, which is one type of AI.
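The idea of using decoded text as input for a language model can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `build_prompt`, `send_to_model`, and `echo_model` are hypothetical names, the decoded words are given as a plain list, and the "model" is a stand-in function rather than any real language-model API.

```python
from typing import Callable, Iterable


def build_prompt(decoded_words: Iterable[str]) -> str:
    """Join decoded words into one prompt, skipping empty decoder outputs."""
    return " ".join(w.strip() for w in decoded_words if w.strip())


def send_to_model(decoded_words: Iterable[str], model: Callable[[str], str]) -> str:
    """Pass the decoded text to any callable that maps a prompt to a reply."""
    prompt = build_prompt(decoded_words)
    if not prompt:
        return ""  # nothing was decoded, so nothing is sent
    return model(prompt)


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model; it only echoes the prompt."""
    return f"[model received] {prompt}"


# Words as they might arrive from a decoder, including an empty output.
reply = send_to_model(["turn", "on", " the ", "", "lights"], echo_model)
print(reply)
```

In a real system the stand-in function would be replaced by a call to an actual language model, and the word list would come from the brain-signal decoder.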

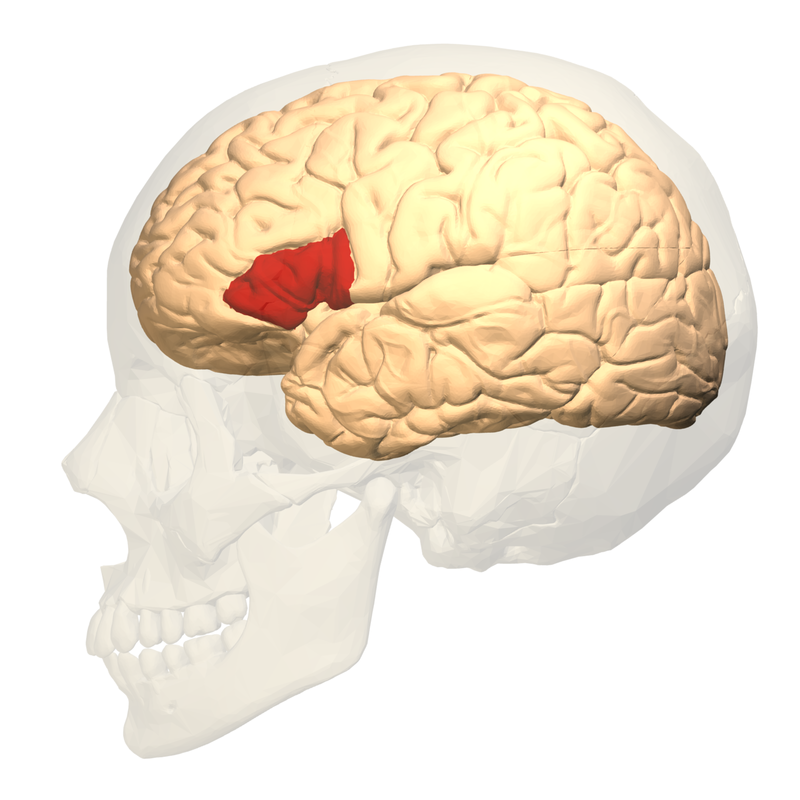

"Schematic drawing of the lateral view of the left hemisphere and the position of the classic Broca’s area defined as encompassing Brodmann’s areas (BA) 44 (yellow) and 45 (blue)."

"Broca's area (shown in red). Colored region is pars opercularis and pars triangularis of the inferior frontal gyrus. Broca's area is now typically defined in terms of the pars opercularis and pars triangularis of the inferior frontal gyrus. (N. F. Dronkers, O. Plaisant, M. T. Iba-Zizen, and E. A. Cabanis (2007). "Paul Broca's Historic Cases: High Resolution MR Imaging of the Brains of Leborgne and Lelong". Brain 130 (Pt 5): 1432–1441. doi:10.1093/brain/awm042. PMID 17405763.)" (Wikipedia, Broca's area)

In principle, a brain implant that turns EEG into text is quite "easy" to make. The system only has to follow the EEG in Broca's area, which lies in the inferior frontal gyrus near the temple. Broca's area is the region of the human brain that controls speech production.

The system only has to transform the EEG into text. When researchers train that kind of system, they must link the EEG from Broca's area to the right words, and the system must then write those words to the computer. For training, the user can simply type words into the computer and then read them aloud into a microphone.

While the user speaks, the system records the EEG in Broca's area. Alternatively, the user can simply read words from the screen. The system then sees how the EEG in Broca's area changes and connects each word with a certain EEG sequence. Once the AI has learned these associations, it can move on to imagined text, and the user no longer needs to speak.
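The training idea described above, linking recorded signal patterns to known words and then recognizing new patterns, can be illustrated with a very small sketch. Everything here is an assumption made for illustration: the signals are synthetic three-number feature vectors, `TEMPLATES` and `simulate` are invented stand-ins for real recordings, and a nearest-centroid classifier is used only because it is simple. It is not the method used by the Caltech researchers.

```python
import math
import random
from collections import defaultdict


def train_centroids(examples):
    """Average the feature vectors recorded for each word.

    examples: list of (word, feature_vector) pairs from a calibration session.
    Returns a dict mapping each word to its mean feature vector.
    """
    sums = {}
    counts = defaultdict(int)
    for word, vec in examples:
        if word not in sums:
            sums[word] = [0.0] * len(vec)
        sums[word] = [s + v for s, v in zip(sums[word], vec)]
        counts[word] += 1
    return {w: [s / counts[w] for s in sums[w]] for w in sums}


def decode(centroids, vec):
    """Return the word whose averaged pattern is closest to the new vector."""
    return min(centroids, key=lambda w: math.dist(centroids[w], vec))


# Synthetic "signal patterns" (assumption: real features would be far larger
# and noisier than these made-up templates).
rng = random.Random(0)
TEMPLATES = {
    "yes": [1.0, 0.0, 0.0],
    "no": [0.0, 1.0, 0.0],
    "help": [0.0, 0.0, 1.0],
}


def simulate(word, noise=0.1):
    """Produce a noisy copy of the template for a word."""
    return [x + rng.gauss(0.0, noise) for x in TEMPLATES[word]]


# Calibration: the user says each known word 20 times while being recorded.
calibration = [(w, simulate(w)) for w in TEMPLATES for _ in range(20)]
centroids = train_centroids(calibration)

# Decoding: new, unlabeled patterns are matched to the closest learned word.
decoded = [decode(centroids, simulate(w)) for w in ["yes", "no", "help"]]
print(decoded)
```

The calibration step corresponds to the user reading words aloud, and the decoding step corresponds to the later phase in which the system recognizes words from the signal alone.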

Two-way communication can be created using tools connected to Wernicke's area, which allows a person to understand the impulses coming from the computer.

This kind of system can control things like Human Universal Load Carrier (HULC)-type robotic exoskeletons that can help paralyzed people operate normally in everyday life. Those systems can also be used to control cars and even aircraft, and next-generation jet fighters might have BCI/BMI user interfaces. Researchers are also working on BCI/BMI systems that don't require surgery.

https://tech.caltech.edu/2024/05/17/life-with-brain-implant/

https://www.iflscience.com/new-brain-implant-translates-imagined-speech-in-real-time-with-best-accuracy-yet-74205

https://scitechdaily.com/mind-control-breakthrough-caltechs-pioneering-ultrasound-brain-machine-interface/

https://en.wikipedia.org/wiki/Human_Universal_Load_Carrier

https://en.wikipedia.org/wiki/Wernicke%27s_area
